Book of Abstracts

(pdf, 15.5 MB)

- Writing and Rewriting: The Colored Digital Visualization of Keystroke Logging

- Leseutgave av Hrafnkels saga, Menotas koding og knytting til andre ressurser

- Tagging Named Entities in 19th century Finnish Newspaper Material with a Variety of Tools

- The Prior-project: From Archive Boxes to a Research Community

- Text Mining the History of Information Politics Through Thousands of Swedish Governmental Official Reports

- Teaching and learning the mindset of the digital historian and more…

- Vectors or Bit Maps? Brief Reflection on Aesthetics of the Digital in Comics

- New multi-language digitised onewspapers and journals from Finland available as data exports for Nordic researchers

- Spatiality, Tactility and Proprioception in Participatory Art

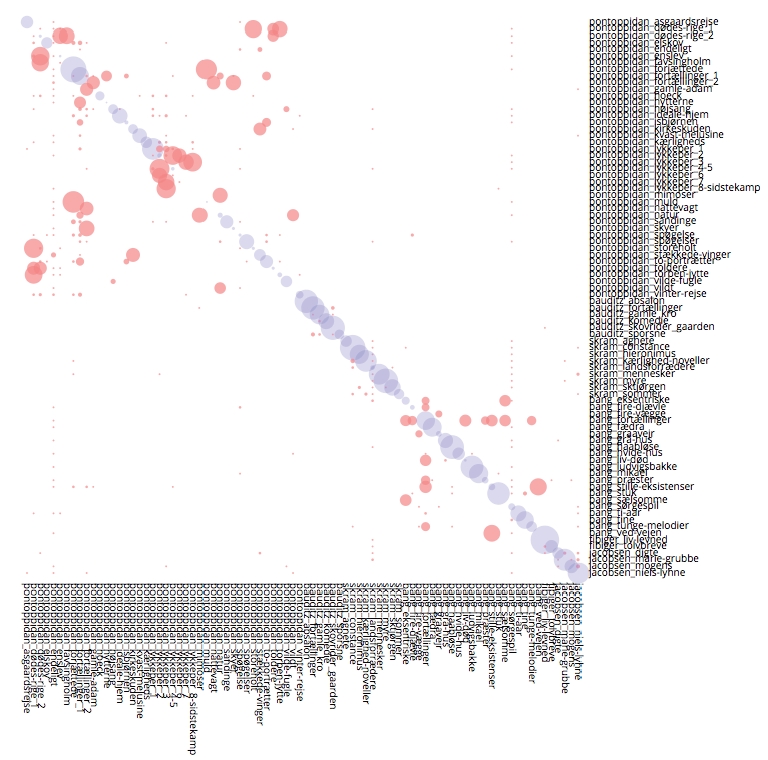

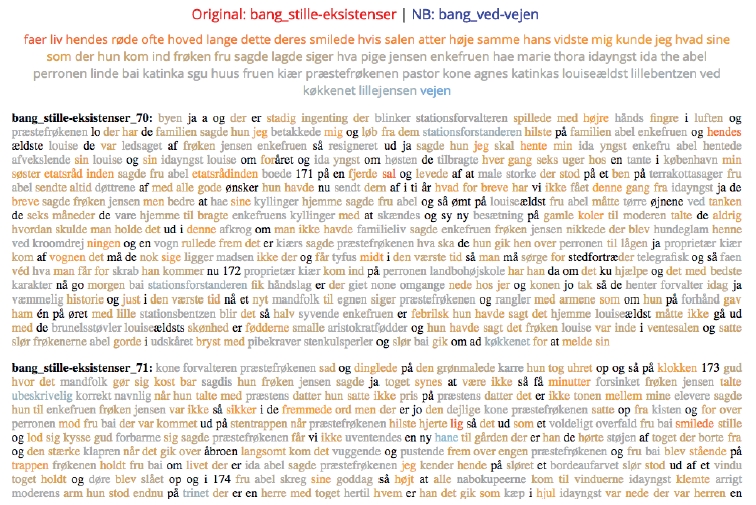

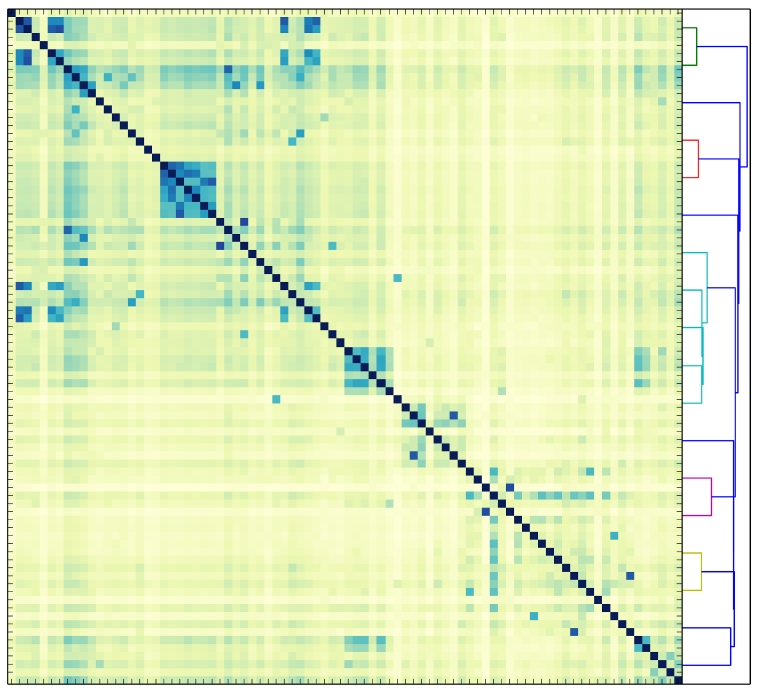

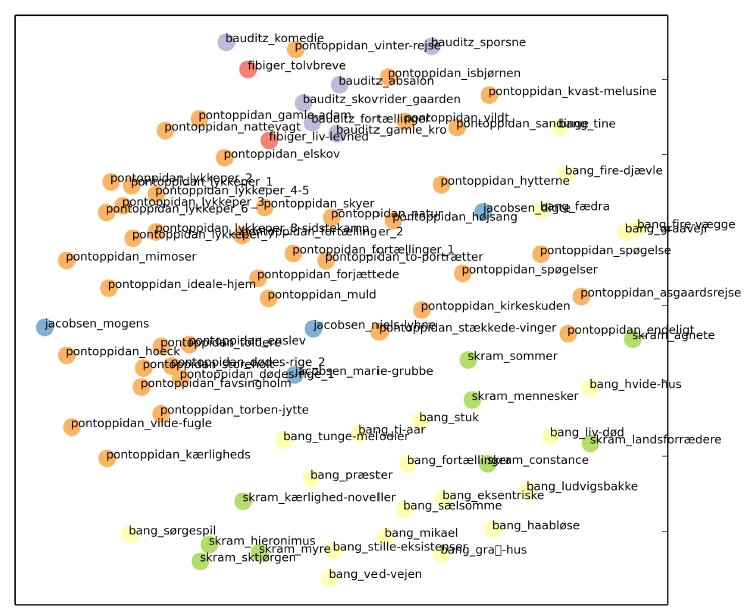

- Confusing the Modern Breakthrough: Naïve Bayes Classification of Authors and Works

- Topical Discourse Networks: Methodological Approaches to Turkish Foreign Policy in Sub-Saharan Africa

- Long-Range Information Dependencies and Semantic Divergence Indicate Author Kehre

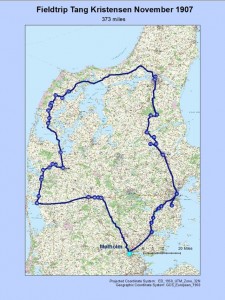

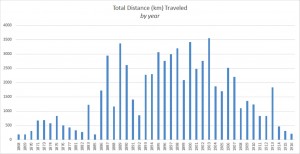

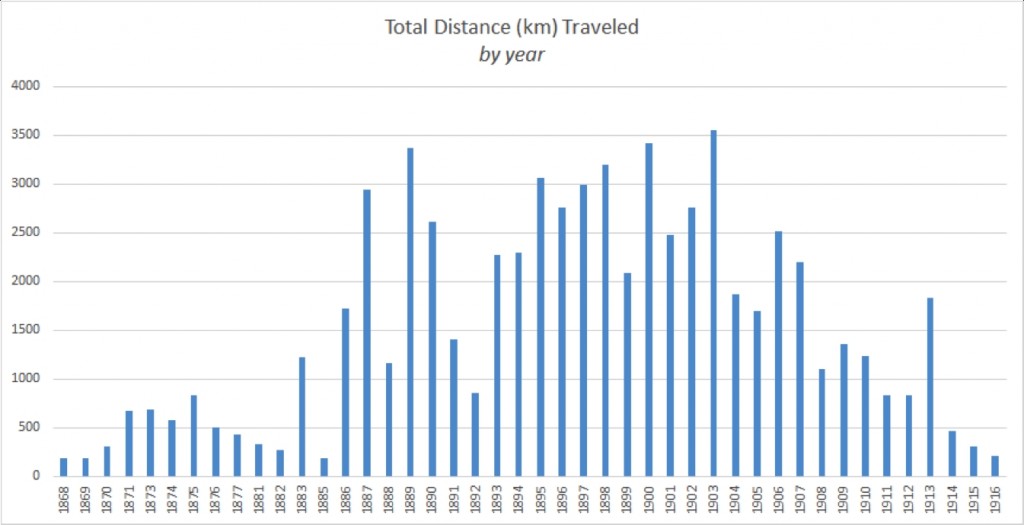

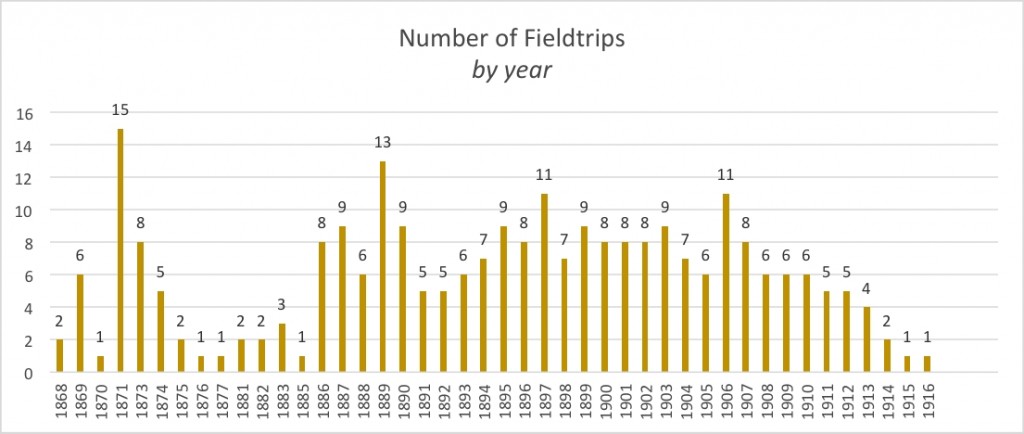

- En temmelig lang fodquantur: hGIS and Folklore Collection in 19th Century Denmark

- Word Spotting as a Tool for Scribal Attribution

- The new Lexicon Poeticum

- Life based design for human researchers

- Visualising genre relationships in Icelandic manuscripts

- Finnish Internet Parsebank- A web-crawled corpus of Finnish with syntactic analyses

- Exploring User Engagement in Crowdsourcing Folk Traditions

- The Elias Lönnrot Letters Online – Challenges of Multidisciplinary Source Material

- Mapping the development of Digital History in Finland

- The Trading Faces online exhibition and its strategies of public engagement

- The Corpus of American Danish: A corpus of multilingual spoken heritage Danish and corpus-based speaker profiles as a way to tackle the chaos

- Bokhylla: A Case Study of the First Complete National Literature Database in the World

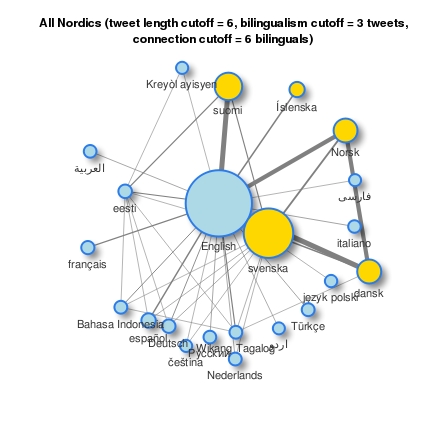

- Multilingual Clusters and Gender in Nordic Twitter

- The Digital Experience: Technology and Representation

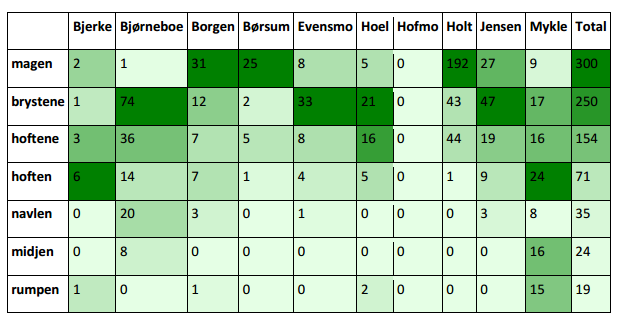

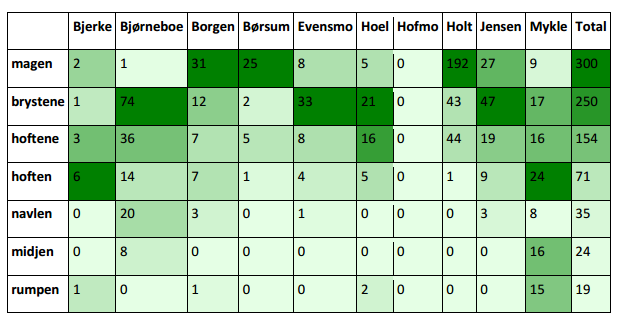

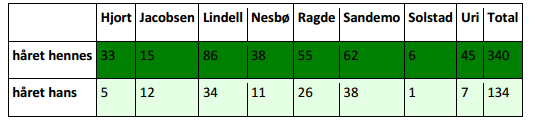

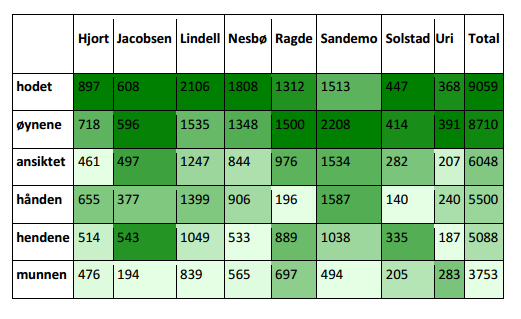

- Body parts in Norwegian books

- Representations: The analogue photography as a digital source

- Rhythms of fear and joy in Suomi24 discussions

- “Reading Moravian Lives: Overcoming Challenges in Transcribing and Digitizing Archival Memoirs”

- Towards a reader-friendly digital scholarly edition

- Presenting the Project: ‘Socio-Economic Relations in Ptolemaic Pathyris: A Network Analytical Approach to a Bilingual Community

- Towards a digital edition of the Codex Regius of the Prose Edda: Philosophy, method, and some innovative tools

- Thoughts about life in Dalarna – Young people’s cultural heritage and ideals in the face of digitized sources

- Málið.is: An Icelandic web portal for dissemination of information on language and usage

- How to study online popular discourse on otherness – Public user interfaces to online discussion forum materials

- [Re]use of Medieval Paintings in the Network Society: A Study of Ethics

- The use of medical visualisation in cultural heritage exhibitions

- The space between: The usefulness of semi-distant readings and combined research methods in literary analysis

- Multidisciplinary Terminology Work in the Humanities: New Form of Collaborative Writing

- The battle of the text – Quantitative methodologies in literary studies

- “These Memories Won’t Last:” Visual Representations of the Forgotten

- Mischievous machines: a design criticism of programmable partners

- From theory to practice: The Sett i gang web portal

- Places and journeys of the contemporary Norwegian novel: A pilot study

- What’s missing in this picture? Political change and wordscapes of Latvian poetry

- Automated improvement of search in low quality OCR using Word2Vec

- Digitization of literary fiction. Example of Jan Potocki’s The Manuscript Found in Saragossa.

- Visualizing the landscape of contemporary Norwegian novels

- Contributing to Nordic Cultural Commons through Hackathons

- Combining data sources for language variation studies and data visualization

- Spatial Humanities and the Norwegian Folklore Archive.

- Senses and emotion of early-modern and modern handicrafts – digital history approach

- The Afterlife of Early Modern Portraiture in Digitized Museum Collections: Discovering Conventions and Forgotten Images

- Organizational and educational issues in representing history through a series of data sprints on visual data from an API

- Sixties Biopoetics: A Media Archaeological Reading of Digital Infrastructure

- Mapping letters across editions

- Digital reconstructions as a scholarly tool. What are we learning?

- Reading Through the Machines: Epistemology, Media Archaeology and the Digital Humanities

- Digitizing Industrial Heritage: Models and Methods in the Digital Humanities

- New Research on Digital Newspaper Collections

- The Nordic Hub of DARIAH-EU: A DH Ecosystem of Cross-Disciplinary Approaches

- Web Archives: What’s in them for Digital humanists? Panel on Web Archiving in the Nordic Countries

- Geospatial networking of contents

- From Online Research Ethics to Researching Online Ethics

- Staging the Medieval Religious Play in Virtual Reality

- Mapping Language Vitality

- Interdisciplinary collaboration for making cultural heritage accessible for research

- Towards a Material Politics of Intensity – Mimetic, Virtual and Anarchistic Assemblages of Becoming-Non-Human/Machine in Minecraft

- Collecting Speech Data over the Internet

- Creating Children’s Books in the context of Pokémon Go, Museums and Cultural Heritage

- Working with digital newspapers

- Automatic identification of metric patterns in brazilian literature

- Enemies of books

- The Cultural Heritage HPC Cluster

- Use of Digital Methods to Switch Identity-related Properties

- Prozhito: Private Diaries Database

- Developing a repository and suite of tools for Scandinavian literature

- Data Management for Humanities Scholars – an introduction to Data Management Plans and the Cultural Heritage Data Reuse Charter

- Transkribus: Handwritten Text Recognition technology for historical documents

- Higher Education Programs in Digital Humanities: Challenges and Perspectives

Long papers

——– LONG PAPER PRESENTATION ——–

Topics: Visual and Multisensory Representations of Past and Present

Keywords: Classification, Keystroke logging, Digital colored visualization, Textgenetics, Time-oriented production

Writing and Rewriting: The Colored Digital Visualization of Keystroke Logging

Christophe Leblay1, Gilles Caporossi2

1University of Turku, Finland; 2HEC Montréal, Canada

As they contain a lot of data, keystroke logging files, are difficult to read and analyze (Wengelin, et al., 2009). There are many reasons for this, including their chronological format and high number of complex details (Kollberg, 1996). However, representations of writing are, so far, one of the main tools used to analyze it. The reason why analyzing the writing process is so important derives from the genetic methodology, where the more a text is changed or modified, the better it becomes (Leblay, 2011). The ultimate goal then becomes to understand how modifications continue to improve the text and how modifications are done in order to understand the way the text continuously improves.

The goal of data representations is to help researchers with their analysis, to assist them in understanding the data and finding patterns in it. Visualization is more than just drawings of data; it is an analysis tool (Manyika, et al., 2011). Seeing how data interacts makes it possible to discover and understand patterns and changes over time within a database (Minelli, et al., 2013; Yau, 2011). For a researcher to use representations in a way that does more than just describe a dataset requires visualization techniques. These techniques are multidisciplinary and include statistics, cognitive science, graphic design, computer science and cartography (Kirk, 2012), in addition to textgenetic analysis.

It is important to consider two complementary concepts on the same visual surface when creating data visualizations, namely, data representation (visual variables in the creation of graphs or charts) and data presentation (appearance and delivery format of the entire data visualization design, colors, the interactive features and the annotations). (Aligner, et al., 2011)

The writing process is difficult to grasp as a whole. From a computer science and mathematics standpoint, there are only two dimensions to this process: the temporal dimension, involving the specific moment when each operation was made; and the spatial one, which corresponds to the exact position of the operation in the list. Because this definition is highly decontextualized, some writing process representations also use a third dimension, chronology, which is a simplification of the temporal aspect (Bécotte-Boutin, Caporossi & Hertz, 2015). The writer adds and removes characters chronologically in time, but the overall state of the text changes as the writer modifies it. Genetic criticism studies precisely the different states of the text. Those three dimensions then concern genetic operations at the most basic level. Each operation of the writing process can be considered as a substitution operation (Van Waes & Schellens, 2003). An insertion would be the replacement of an empty space by a keystroke, and the deletion or replacement of a keystroke by an empty space. These operations are characterized by the fact they are done in a single step with the mouse or keyboard. More complex operations, such as substitution and replacement, which are done in two steps (Caporossi & Leblay, 2011), are considered to be combinations of the simple operations.

Another aspect of the writing process is the micro and macro aspects of the text, i.e., the detailed operations performed and the process’overall structure. Because those two aspects cannot be visualized together in the same representation unless interactivity and the view adjustment feature are used (Aigner, et al., 2011) researchers usually use several representations to understand the process more completely (Alamargot, et al., 2011; Breetvelt, et al., 1994; Caporossi & Leblay, 2011; Cox, et al., 2009; Doquet-Lacoste, 2003; Haas, 1989; Latif, 2008; Leijten & Van Waes, 2013; Southavilay, et al., 2013; Van Waes & Schellens, 2003).

Actual visualizations of the writing process are bidimensional, and because of that, they focus for example on revision, the temporal aspect or the writer’s retrospection (Latif, 2008). Even if it is important to analyze and understand the spatiotemporal dimension of the process (Stromqvist, et al., 2006), none of the actual visualizations represent the problem completely.

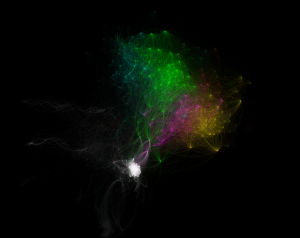

We propose new visualizations based on mathematical graphs that consist of nodes (points) and edges (lines eventually joining the nodes). As such, graphs are based on relationships between nodes and may be used for modeling purposes. This colored representation is halfway between detailed representations and overviews. The dynamic aspect of the writing process is highlighted (Caporossi & Leblay, 2011; Leblay & Caporossi, 2014). One of its strength is that it clearly shows the temporal and chronological relationships between operations, facilitating their identification in a structured way. Another advantage of this visualization of the writing process is that it “can handle moving text positions” (Southavilay, et al., 2013).

Bibliography

Aigner, W., Miksch, S., Schumann, H., & Tominski, C. (2011). Visualization of Time-Oriented Data. Human-Computer Interaction Series. London: Springer.

Alamargot, D., Caporossi, G., Chesnet, D., & Ros, C. (2011). What makes a skilled writer? Working memory and audience awareness during text composition. Learning and Individual Differences, 21 (5), 505-516.

Breetvelt, I., Van Den Bergh, H., & Rijlaarsdam, G. (1994). Relations between Writing Processes and Text Quality: When and How. Cognition and Instruction, 12 (2), 103-123.

Caporossi, G., & Leblay, C. (2011). Online Writing Data Representation: A Graph Theory Approach. In Lecture Notes in Computer Sciences 7014, 80-89.

Cox, M., Ortmeier-Hopper, C., & Tirabassi, K. E. (2009). Teaching Writing for the “Real World”: Community and Workplace Writing. The English Journal, 98 (5), 72-80.

Doquet-Lacoste, C. (2003). Étude Génétique de l’Écriture sur Traitement de Texte d’Élèves de Cours Moyen 2, Année 1995-1996. Paris: Université Sorbonne nouvelle.

Haas, C. (1989). How the Writing Medium Shapes the Writing Process: Effects of Word Processing on Planning. Research in the Teaching of English, 23 (2), 181-207.

Kirk, A. (2012). Data visualization: a successful design process [electronic book]. Packt Pub.

Kollberg, P. (1996). Rules for the S-notation: a computer-based method for representing revisions. Stockholm, Sweden: IPLab, Royal Institute of Technology (KTH).

Latif, M. M. (2008). A State-of-the-Art Review of the Real-Time Computer-Aided Study of the Writing Process. International Journal of English Studies, 8 (1), 29-50.

Leijten, M., & Van Waes, L. (2013). Keystroke Logging in Writing Research: Using Inputlog to Analyze and Visualize Writing Processes. 30 (3), 358-392.

Leblay, C. & Caporossi, G. (2014). Temps de l’écriture: enregistrements et représentations. Louvain-la-Neuve: Academia.

Manyika, J., Chui, M., Brown, B., Bughin, J., Dobbs, R., Roxburgh, C., et al. (2011). Big data: the next frontier for innovation, competition, and productivity. McKinsey Global Institute.

Minelli, M., Chambers, M., & Dhiraj, A. (2013). Big data, big analytics: Emerging business intelligence and analytic trends for today’s businesses. Wiley Publishing.

Southavilay, V., Yacef, K., Reimann, P., & Calvo, R. A. (2013). Analysis of Collaborative Writing Processes Using Revision Maps and Probabilistic Topic Models. Proceedings of the Third International Conference on Learning Analytics and Knowledge, 38-47.

Stromqvist, S., Holmqvist, K., Johansson, V., Karlsson, H., & Wengelin, A. (2006). What Keystroke Logging can Reveal about Writing. In K. P. Lindgren (Ed.), Computer Keystroke Logging and Writing. Elsevier, 45-71.

Van Waes, L., & Schellens, P. J. (2003). Writing Profiles: The Effect of the Writing Mode on Pausing and Revision Patterns of Experienced Writers. Journal of Pragmatics, 35, 829-853.

Wengelin, A., Torrance, M., Holmqvist, K., Simpson, S., Galbraith, D., Johansson, V., et al. (2009). Combined eyetracking and keystroke-logging methods for studying cognitive processes in text production. Behavior Research Methods, 41 (2), 337-351.

Yau, N. (2011). Visualize this: the flowing data guide to design, visualization and statistics. Indianapolis: Wiley Publishing.

——– LONG PAPER PRESENTATION ——–

Topics: Nordic Textual Resources and Practices

Keywords: digital edition, working with online resources, teaching

Leseutgave av Hrafnkels saga, Menotas koding og knytting til andre ressurser

Fabian Schwabe

Eberhard Karls Universität Tübingen, Germany

Når man arbeider på ei digital utgave av en norrønt tekst trenges det å tenke på ei ordentlig koding av denne teksten slik at man ikke bare kan vise teksten selv på ei nettside, men også har teksten i en format som er forståelig for andre og kan benyttes av dem. På dette feltet er det sikkert lurt å kaste et nøyet blikk på anbefalingene til Medieval Nordic Text Archive (Menota). Der finnes det et utarbeidet foreslag, hvordan man kunne bruke ei XML-koding til å beskrive norrøne håndskrifter og lagre deres innhold.

Denne kodinga bygger opp på foreslagene til Text Encoding Initiative (TEI) som nå har utviklet seg som en standard til å kode tekster innenfor humaniora. Forskjellen mellom kodingssystemene er at TEI prøver å by ei mer allmenn koding for nesten alle tekstsjanger man kan tenke seg, mens Menota har momentan et veldig begrenset anvendelsesområde i blikket, fordi dets fokus ligger på det enkelte håndskriftet og lemmatiseringa av ordformene brukt i dette håndskriftet. Men dette vil bare være begynnelsen. Odd Einar Haugen, en av initiativtakene til Menota, beskriver i artikkelen sin Stitching the Text Together (Haugen 2010) at på grunnlaget av håndskriftene i form av enkle dokumentariske edisjoner kan det oppstå en (ny) eklektisk edisjon av en tekst. Når den skal være digital, må edisjonen en gang til bli kodet. Momentan er dette ikke inn i målene til Menota, men på lang sikt vil det utvilsom komme inn som det ble omdiskutert i Haugens artikkel.

I stedet for å vente på ei XML-koding av alle relevante håndskrifter til en tekst til å lage en eklektisk edisjon, kan man også lage litt mindre ambisjonerte leseutgaver til tekstene som møter en stor interesse eller har ei stor betydning innenfor den norrøne filologien, i forskning eller undervisning. Med henblikk til undervisning av nybegynnere av det norrøne språket jobbet jeg med ei slik leseutgave til Hrafnkels saga Freysgoða. Målet av utgava er en grammatikalsk og semantisk selvforklarende tekst. Det vil si at hver eneste ordform i sagateksten ble eller blir lemmatisert slik at den språkinteresserte leseren får nok hjelp for å forstå syntaksen og betydninga av ordene.

I tillegg er alle ord knyttet til ordbøkene til Fritzner og Cleasby/Vigfusson som gir oversettelser til norsk respektive engelsk, og til Noreens grammatikk som gir mer informasjon om deklinasjonen til ord og enkelte ordformer. Knyttinga fungerer for største delen med enkle lenker. Det går ganske bra med Noreens grammattiken som Andrea de Leeuw van Weenen har overført til ei HTML-fil, og Fritzners ordbok som ble organisert som en database av prosjektet Eining for digital dokumentasjon (EDD). Ordboka til Cleasby/Vigfusson ble overført til ei fil med enkel markup av Sean Crist av prosjektet Germanic Lexicon. Knyttinger til nettsida av prosjektet er bare ei mellomløsning, til bearbeidelsen min av dataene i fila som er fri tilgjengelig, er ferdig gjort. Jeg skal jobbe på å vise bare de relevante ordbokartiklene i en klar og enkel layout. I den omtalte edisjonen er nå bestemt omtrent 90% av ordene; edisjonen eller leseutgava som jeg kaller den, finnes under http://ecenter.uni-tuebingen.de/hrafnkels-saga/start.html.

Den digitale teksten med alle språklige annotasjoner er kodet som XML etter standarden til Menota, mens Ordbog over det norrøne prosasprog er grunnlaget for normaliseringa. Lemmatisering er lagt etter kodingssystemet til Menota, men for å rekke målene måtte det bli utvidet slik at den grammatikalske kodinga kunne være mer detaljert. I Menotas kodingssystem er det mulig å klassifisere verb som svake, sterke eller redupliserande, mens substantiver kan bare kategoriseres som vanlige eller egennavn. Når det gjelder preposisjoner og konjunksjoner er det mulig å bestemme dem ganske detaljert. Preposisjonen har en reksjon eller blir brukt adverbial. Konjunksjonene deles opp i subjunksjoner og konjunksjoner. Men i kodingssystemet var det ikke planlagt å bestemme bøyingsklassene til substantiver eller verb. Bøyingsklasser er svært interessant for nybegynnere, fordi de hjelper å identifisere ordformer og finne seg til rette i en norrøn tekst. Utvidelsen av kondingssystemet jeg gjorde, fører til at edisjonen kunne være knyttet til andre ressurser på nettet som atter forbedrer edisjonsteksten selv.

I øyeblikket blir det arbeidet på en revisjon av Menotas håndbok om XML-kodinga. Jeg har allerede meldt tilbake til Menota at det burde være mulig å være mer presis med beskrivinga av grammatikken. Sannsynligvis skal et resultat av revisjonen være denne utvidelsen. Med dette kodingssystemet har man et verktøy som ikke bare kan nyttes for å kode håndskrifter, men som nok er detaljert for å finne anvendelse i andre grammatikalsk orienterte prosjekter. I tillegg kan XML-kodinga til Menota blir et digitalt verktøy for forskjellige edisjonstyper (innenfor norrøn filologi), og ikke bare for dokumentariske edisjoner som i dag kodinga brukes til.

Bibliography

– Cleasby, Richard og Gudbrand Vigfusson, An Icelandic-English Dictionary, Oxford 1874.

– Fritzner, Johan, Ordbog over det gamle norske sprog, 4 bind, 2. utgave, Kristiania 1883-96.

– Noreen, Adolf, Altisländische und altnorwegische Grammatik. Laut- und Flexionslehre unter Berücksichtigung des Urnordischen (Sammlung kurzer Grammatiken germanischer Dialekte A, 4), 4. utgave, Halle/Saale 1923.

– Haugen, Odd Einar, Stitching the Text Together: Documentary and Eclectic Editions in Old Norse Philology. In Quinn, Judy & Lethbridge, Emily (Hgg.), Creating the Medieval Saga: Versions, Variability and Editorial Interpretations of Old Norse Saga Literature, Viborg 2010, s. 39-65.

– Heimskringla.no, Hrafnkels saga Freysgoða etter Guðni Jónsson – http://heimskringla.no/wiki/Hrafnkels_saga_Freysgo%C3%B0a

– Leseutgave av Hrafnkels saga – http://ecenter.uni-tuebingen.de/hrafnkels-saga/start.html.

– Digital versjon av Altnordische Grammatik av Adolf Noreen – http://www.arnastofnun.is/solofile/1016380.

– EDD, Johan Fritzners ordbok – http://www.edd.uio.no/perl/search/search.cgi?appid=86&tabid=1275.

– Germanic Lexicon Project – http://lexicon.ff.cuni.cz/.

– Menotas håndbok 2.0 – http://menota.org/HB2_index.xml.

– TEI: P5 Guidelines – http://www.tei-c.org/Guidelines/P5/index.xml

——– LONG PAPER PRESENTATION ——–

Topics: Nordic Textual Resources and Practices

Keywords: named entity recognition, historical newspaper collections, Finnish

Tagging Named Entities in 19th century Finnish Newspaper Material with a Variety of Tools

Kimmo Kettunen and Teemu Ruokolainen

The National Library of Finland, Centre for Preservation and Digitization, Mikkeli, Finland

Abstract Named Entity Recognition (NER), search, classification and tagging of names and name like frequent informational elements in texts, has become a standard information extraction procedure for textual data. NER has been applied to many types of texts and different types of entities: newspapers, fiction, historical records, persons, locations, chemical compounds, protein families, animals etc. In general a NER system’s performance is genre and domain dependent and also used entity categories vary (Nadeau and Sekine, 2007). The most general set of named entities is usually some version of three partite categorization of locations, persons and organizations. In this paper we report evaluation of NER with data out of a digitized Finnish historical newspaper collection Digi (digi.kansalliskirjasto.fi). Experiments, results and discussion of this research serve development of the Web collection of historical Finnish newspapers.

Digi collection contains 1,960,921 pages of newspaper material from years 1771–1910 both in Finnish and Swedish. Total number of Finnish pages is 1 063 648, and total number of Swedish pages 892 101. We use only material of Finnish documents in our evaluation. The OCRed newspaper collection has lots of OCR errors; its estimated word level correctness is about 70–75 % (Kettunen and Pääkkönen, 2016). Our baseline NER tagger is a rule-based tagger of Finnish, FiNER, provided by the FIN-CLARIN consortium. Three other tools, ARPA, a semantic web linking tool, Finnish Semantic Tagger, and Connexor’s NER software are also evaluated. We report also development work of a statistical tagger of Finnish and a new evaluation and learning corpus for NER of historical Finnish.

Introduction

Digital newspapers and journals, either OCRed or born digital, form a growing global network of data that is available 24/7, and as such they are an important source of information. As the amount of digitized journalistic information grows, also tools for harvesting the information are needed. Named Entity Recognition (NER) has become one of the basic techniques for information extraction of texts since the mid-1990s (Nadeau and Sekine, 2007). In its initial form NER was used to find and mark semantic entities like person, location and organization in texts to enable information extraction related to this kind of material. Later on other types of extractable entities, like time, artefact, event and measure/numerical, have been added to the repertoires of NER software (Nadeau and Sekine, 2007). In this paper we report evaluation results of NER for historical 19th century Finnish. Our historical data consists of an evaluation collection out of an OCRed Finnish historical newspaper collection 1771–1910 (Kettunen and Pääkkönen, 2016).

Kettunen et al. (2016) have reported first NER evaluation results of the historical Finnish data with two tools, FiNER and ARPA. FiNER is provided by the Fin-CLARIN consortium, ARPA is a semantic web tool produced by the Semantic Computing group at the Aalto University. Both tools achieved maximal F-scores of about 60 at best, but with many categories the results were much weaker. Word level accuracy of the evaluation collection was about 73 %, and thus the data can be considered very noisy. NER results for modern Finnish have not been reported extensively so far. Silfverberg (2015) mentions a few results in his description of transferring an older version of FiNER to a new version. With modern Finnish data F-scores round 90 are achieved.

Results for the Historical Data

Our historical Finnish evaluation data consists of 75 931 lines of manually annotated newspaper text, one word per line. Most of the data is from the last decades of 19th century. Earlier NER evaluations with this data have achieved at best F-scores of 50–60 in some name categories (Kettunen et al., 2016). Our baseline tagger, FiNER, is described more in Kettunen et al. (2016). Shortly described, it is a rule-based NER tagger that uses morphological recognition, morphological disambiguation, gazetteers (name lists), pattern and context rules for name tagging.

Table 1 shows F-score results of four evaluations of locations and persons in our evaluation data. EnamexPrsHums contain both first names and last names; EnamexLocXxx is a general location category that combines three more refined location categories to one.

| <EnamexPrsHum> | <EnamexLocXxx> | |||

| F-score | Number of found tags | F-score | Number of found tags | |

| ARPA | 52.9 | 3636 | 52.4 | 2933 |

| Connexor | 56.4 | 5321 | 60.9 | 1802 |

| FiNER | 58.1 | 2681 | 57.5 | 1541 |

| FST | 51.1 | 1496 | 56.7 | 1253 |

Table 1. Evaluation of four tools with loose criteria and two name categories in the historical newspaper collection. Best results are in bold.

All taggers recognize locations and persons quite evenly, differences are small. Our baseline tagger FiNER achieves best F-score with persons, Connexor with locations. Performance of the taggers is quite bad, which is expectable as the data is very noisy.

| Locations | Persons | |

| ARPA right tag, word unrecognition rate | 1.9 | 4.5 |

| Connexor right tag, word unrecognition rate | 10.2 | 25.0 |

| FiNER right tag, word unrecognition rate | 6.3 | 12.8 |

| FST right tag, word unrecognition rate | 5.6 | 0.06 |

| ARPA wrong tag, word unrecognition rate | 22.7 | 29.3 |

| Connexor wrong tag, word unrecognition rate | 53.5 | 57.4 |

| FiNER wrong tag, word unrecognition rate | 38.3 | 34.0 |

| FST wrong tag, word unrecognition rate | 44.0 | 33.3 |

Table 2. Unrecognition rates for rightly and wrongly tagged words, per cent.

Development of a New Statistical Tagger

Our baseline tagger FiNER employed in the above experiments is a rule-based system utilizing morphological analysis, gazetteers, and pattern and context rules. However, while there does exist some recent work on rule-based systems for NER (Kokkinakis et al., 2014), the most prominent research on NER has focused on statistical machine learning methodology for a longer time (Nadeau and Sekine, 2007; Neudecker 2016). Therefore, we are currently developing a statistical NER tagger for historical Finnish text. For training and evaluation of the statistical system, we are manually annotating newspaper and magazine text from the years 1862–1910 with classes person, organization, and location. The text contains approximately 650,000 word tokens. Subsequent to annotation, we can utilize freely available toolkits, such as the Stanford Named Entity Recognizer (Finkel et al., 2005), for teaching the NER tagger. We expect that the rich feature sets enabled by statistical learning will alleviate the effect of poor OCR quality on the recognition accuracy of NEs. For recent work on statistical learning of NER taggers for historical data, see Neudecker (2016).

Discussion

In this paper we have shown results of NE tagging of historical OCRed Finnish with four tools: FiNER ARPA, a Finnish Semantic Tagger, the FST, and Connexor’s NE software. FiNER and Connexor’s tagger are dedicated NER tools for modern Finnish, but the FST is a general semantic tagger and ARPA a semantic web linking tool. Our results show that they all tag names of locations and persons almost at the same level in the noisy OCRed historical newspaper collection. FiNER is best with names of persons, Connexor with locations. Differences between tagger performances are at biggest 7–8 % points.

In general our results show that NE tagging in a noisy historical newspaper collection can be done to a reasonable extent with tools that have been developed for modern Finnish. Anyhow, it seems obvious, that better results could be achieved with a new tool, which is trained with the noisy historical data. We have ongoing development work with regards to this. We also try to improve the quality of our OCRed text data with new OCRing and post-correction. Together these should yield better NER results in the future.

Our main emphasis with NER will be to use the names with the newspaper collection as a means to improve structuring, browsing and general informational usability of the collection. A good enough coverage of the names with NER needs to be achieved also for this use, of course. A reasonable balance of P/R should be found for this purpose, but also other capabilities of the software need to be considered. These remain to be seen later, if we are able to connect functional NER to our historical newspaper collection’s user interface.

Acknowledgements

This work is funded by the EU Commission through its European Regional Development Fund, and the program Leverage from the EU 2014–2020.

References

Bates, M. (2007). What is Browsing – really? A Model Drawing from Behavioural Science Research. Information Research 12. http://www.informationr.net/ir/12-4/paper330.html.

Finkel, J.R., Grenager, T. and Manning, C. (2005). Incorporating non-local information into information extraction systems by Gibbs sampling. In Proceedings of the 43rd Annual Meeting on Association for Computational Linguistics (ACL 2005), 363–370, available at http://dl.acm.org/citation.cfm?id=1219885.

Kettunen, K., Mäkelä, E., Kuokkala, J., Ruokolainen, T. and Niemi, J. (2016). Modern Tools for Old Content – in Search of Named Entities in a Finnish OCRed Historical Newspaper Collection 1771-1910. LWDA 2016, available at: http://ceur-ws.org/Vol-1670/paper-35.pdf

Kettunen, K. and Pääkkönen, T. (2016). Measuring Lexical Quality of a Historical Finnish Newspaper Collection – Analysis of Garbled OCR Data with Basic Language Technology Tools and Means. In LREC 2016, Tenth International Conference on Language Resources and Evaluation, available at http://www.lrec-conf.org/proceedings/lrec2016/pdf/17_Paper.pdf.

Kokkinakis, D., Niemi, J., Hardwick, S., Lindén, K., and Borin. L. (2014). HFST-SweNER – a New NER Resource for Swedish. In Proceedings of LREC 2014, available at: http://www.lrec-conf.org/proceedings/lrec2014/pdf/391_Paper.pdf.

Löfberg, L., Piao, S., Rayson, P., Juntunen, J-P, Nykänen, A. and Varantola, K. (2005). A semantic tagger for the Finnish language, available at http://eprints.lancs.ac.uk/12685/1/cl2005_fst.pdf.

McNamee, P., Mayfield, J.C., and Piatko, C.D. (2011). Processing Named Entities in Text. Johns Hopkins APL Technical Digest, 30, 31–40.

Mac Kim, S., Cassidy, S. (2015). Finding Names in Trove: Named Entity Recognition for Australian. In Proceedings of Australasian Language Technology Association Workshop, available at https://aclweb.org/anthology/U/U15/U15-1007.pdf.

Manning, C. D., Schütze, H. (1999). Foundations of Statistical Language Processing. The MIT Press, Cambridge, Massachusetts.

Nadeau, D., and Sekine, S. (2007). A Survey of Named Entity Recognition and Classification. Linguisticae Investigationes, 30(1): 3–26.

Neudecker, C. (2016). An Open Corpus for Named Entity Recognition in Historic Newspapers. In LREC 2016, Tenth International Conference on Language Resources and Evaluation, available at http://www.lrec-conf.org/proceedings/lrec2016/pdf/110_Paper.pdf .

Poibeau, T. and Kosseim, L. (2001). Proper Name Extraction from Non-Journalistic Texts. Language and Computers, 37(1): 144–157.

Silfverberg, M. (2015). Reverse Engineering a Rule-Based Finnish Named Entity Recognizer. Paper presented at Named Entity Recognition in Digital Humanities Workshop, June 15, Helsinki available at: https://kitwiki.csc.fi/twiki/pub/FinCLARIN/KielipankkiEventNERWorkshop2015/Silfverberg_presentation.pdf

Toms, E.G. (2000). Understanding and Facilitating the Browsing of Electronic Text. International Journal of Human-Computer Studies, 52, 423–452.

——– LONG PAPER PRESENTATION ——–

Topics: The Digital, the Humanities, and the Philosophies of Technology

Keywords: Digital Epistemology, Domain Analysis, Ontology, Research Infrastructure, Virtual Closed Collaborative Community.

The Prior-project: From Archive Boxes to a Research Community

Volkmar Engerer, Henriette Roued-Cunliffe, Jørgen Albretsen, Per Hasle

Royal School of Library and Information Science, University of Copenhagen, Denmark

Introduction

A very important part of Digital Humanities (DH) is the development, use and discussion of digital research infrastructures within the humanities field. In fact, the notion of DH is often almost identified with this kind of endeavours. We ourselves think that such a conception is too narrow and misses important points, but we shall not attempt a general discussion here. However, it is important to note that data and representations within the humanities are often more heterogeneous and more dependent on domain expertise than are datasets within the STEM and in fact even the social sciences, whose datasets tend to be more regular and more amenable to standardized tools for storing, retrieving, analysing and visualising.

In this paper, we present a DH research infrastructure which relies heavily on a combination of domain knowledge with information technology. The general goal is to develop tools to aid scholars in their interpretations and understanding of temporal logic. This in turn is based on an extensive digitisation of Arthur Prior’s Nachlass kept in the Bodleian Library, Oxford. The DH infrastructure in question is the Prior Virtual Lab (PVL). PVL was established in 2011 in order to provide researchers in the field of temporal logic easy access to the papers of Arthur Norman Prior (1914-1969), and officially launched together with Prior’s Nachlass at the Arthur Prior Centenary Conference at Balliol College, Oxford, in August 2014 (Arthur Prior Centenary Conference 2014; Albretsen et al. 2016a).

Prior was a distinguished logician, philosopher, and in his younger years also a theologian. He is best known for his work on time and for being the founding father of modern temporal logic, beginning in New Zealand in the early 1950s. In 1956 he presented his ideas at the John Locke Lectures in Oxford. Following this he took up a professorship in Manchester (1959-1965) and was later appointed Reader in the University of Oxford and Fellow of Balliol College (1966-1969). Prior died, age 55, from a heart attack, while on a lecturing tour in Norway (Priorstudies 2016).

Prior’s archive is now kept in the Bodleian Library in Oxford and is still subject to copyright. Following an agreement between the team behind PVL, the Prior family, and the Bodleian Library, the team has been permitted to take digital photos of the archive material. This work is ongoing and has currently resulted in approx. 7000 photos, which have been reassembled in the PVL so that they mirror the original documents, integrating a facility for transcribing them and adding user comments (PVL 2016).

Current Prior Virtual Lab

The restricted access has inspired the term Virtual Closed Collaborative Community. To get access a potential new user must first contact the project team and ask for login, stating her or his areas of research and in which ways that person’s work in the PVL can add to the collaborative effort of publishing Prior’s Nachlass – the digital edition of Prior’s hitherto unpublished papers as well as other relevant material such as correspondence between Prior and other researchers, Prior’s notebooks and scrapbooks etc. In PVL users can follow each other’s progress and add comments to on-going transcriptions (Albretsen et al. 2016b). After an editorial process the transcribed texts are made available in PDF format combined with a prototype search facility (Nachlass 2016). Those users who have contributed to the transcription are credited in the footnotes of each transcribed edition. Our experience to date is that the current PVL is in need of enhanced search facilities combined with underlying metadata structures in the Nachlass.

Digital Humanities project

Prior’s archive includes various documents such as drafts of philosophical essays, letter correspondence between Prior and other scholars, or sudden ideas scribbled as hand-written notes. These documents are currently used as information sources about Prior’s convictions, theories, his life, relations to colleagues etc. However, when digitised, transcribed, and connected to each other through a database structure, they become a research object in their own right. Because of this transformation, Prior scholars can now explore patterns in the structure of the documents that were not visible before.

The further development of PVL is headed by a research group (the authors of this abstract) at the Royal School of Library and Information Science, University of Copenhagen. This activity forms an important part of the “Prior project”, which 2016 received funding from the Danish Council for Independent Research | Humanities to carry out the research project The Primacy of Tense: A.N. Prior Now and Then, duration three years (DFF Grant 2016). The further development of PVL will be split into work on the data repository and the interface as two separate entities. The team behind PVL are to varying degrees Prior scholars, digital humanists, information scientists, and database engineers. Moreover, we have a vivid exchange with the other project researchers as well as the users of PVL. The project aims to combine this cross-disciplinary expertise in order to integrate community-specific practices of Prior scholars into the data structures and interfaces of the digital tools they use. It must be said that the information behaviour of the users has not yet been studied systematically. Such a study is one of the points within our project plan.

Data repository

In order to extend the existing facilities of the PVL it is necessary to offer a data and query structure that enables Prior scholars to explore the documents with varying and flexible parameters such as references to logicians, publications being mentioned and theories discussed. The new PVL architecture aims to separate the data structure from the interface and to develop a sustainable dataset that is suitable for both new and future interface designs. It applies traditional information science knowledge (mostly generated in the library domain) to the data repository, drawing on insights from indexing theory, metadata research, knowledge organization, information retrieval, and theories of information seeking.

A further refinement of the PVL’s data structure can be achieved by ontologies. The information scientific concept of an ontology encompasses the sphere of indexing terms and related search terminology at the same time, and therefore regards index terms as closely related to the vocabulary used by specialists in their domain. The step from traditional thesauri and classification schemes to ontologies of knowledge domains integrates semantic web principles into the description of data and introduces a controlled language for knowledge representation with a built-in logic. This also makes it possible to derive information which is not explicitly contained in the descriptive terms themselves (Antoniou et al. 2012: 4). To be a bit more specific, let’s give an example. The metadata established (or to be established) in this infrastructure will contain not only standardised metadata such as author, title, date, etc., but also and in fact more so domain specific metadata such as types of temporal logic, e.g. A-series and B-series logics, hybrid logic, metric and non-metric tense logic, and so on. These notions can only be established by domain experts and not by general information specialists. At the same time, they are exactly the kind of metadata that makes it possible to search and chart the kind of patterns that experts in the field are looking for.

New interface for PVL

It is our goal to make the PVL a research portal, where query results are presented to scholars through an interface that facilitates the identification of new relationships, identify patterns, and offer alternative ways of understanding and analysis. This work will build on concepts identified in Roued-Cunliffe’s (2011) research on Decision Support Systems for the reading of ancient documents. This research examines digital tools useful for the transcription, interpretation and publication of the Vindolanda Tablets (2010) from the Roman occupation in Britain. However, many of the conclusions are equally relevant for scholarship on other handwritten documents such as Prior.

Building the new interface comprises the task of bringing together Prior community practices with system design, metadata structure and the system’s affordances in terms of Prior researchers’ information seeking behaviour.

Conclusion

PVL as well as the general website concerned with Prior’s work and his archive in the Bodleian Library has without doubt already for quite some time been a useful DH infrastructure for researchers. This is evident not least in many papers from the Arthur Prior Centenary Conference, cf. (Albretsen et al. 2016a), to which the Nachlass material made available through PVL was crucial. Moreover, this infrastructure clearly could not have been developed without specific expertise on temporal logic and Prior’s work. PVL is, in all modesty, a showcase of how important humanities material kept in a research library can be digitised using domain knowledge (and indeed only when using domain knowledge), and made available and useful for the relevant research community, making up the Virtual Closed Collaborative Community. In this manner it also reflects an important characteristic of many research infrastructures for the humanities, namely a particularly strong call for domain expertise for their useful development. The perspectives for taking PVL to its next level raises some new information scientific, not to say epistemological, issues of great importance. The development of a relevant ontology together with search options and visualisations of search results as well as other PVL material is in fact not just about making powerful tools available for research in temporal logic and in Prior’s work; it is itself such research. The structure to be achieved is not neutral. It is itself a kind of theory about the internal coherence in Prior’s work, a “statement” about its overall architecture. This we intend to elaborate in a longer ensuing paper, but we hope to have established a convincing case to the effect that our infrastructure does indeed form a sufficient basis for studying some pertinent epistemological issues for DH.

References:

Albretsen, J., Hasle, P., and Øhrstrøm, P. 2016a. Special Issue on The Logic and Philosophy of A.N. Prior. Synthese. Volume 193 Number 11. Guest edited by Jørgen Albretsen, Per Hasle, and Peter Øhrstrøm. http://link.springer.com/journal/11229/193/11/page/1. Retrieved November 14, 2016.

Albretsen, J., Hasle, P., and Øhrstrøm, P. 2016b. The Virtual Lab for Prior Studies: An example of a Closed Collaborative Community, DRAFT paper. http://research.prior.aau.dk/anp/pdf/The_Virtual_Lab_for_Prior_Studies_article_draft.pdf. Retrieved November 14, 2016.

Antoniou, Grigoris, Groth, Paul, van Harmelen, Frank & Hoekstra, Rinke. 2012. A semantic Web primer. 3rd. Cambridge, Mass.: MIT Press.

Arthur Prior Centenary Conference. 2014. http://conference.prior.aau.dk/. Retrieved November 14, 2016.

DFF Grant. 2016. The Primacy of Tense: A.N. Prior Now and Then, funded 2016-2019 by the Danish Council for Independent Research | Humanities. DFF|FKK Grant-ID: DFF – 6107-00087. http://ufm.dk/forskning-og-innovation/tilskud-til-forskning-og-innovation/hvem-har-modtaget-tilskud/2016/bevillinger-fra-det-frie-forskningsrad-kultur-og-kommunikation-til-dff-forskningsprojekt-2-juni-2016. Retrieved November 14, 2016.

Nachlass. 2016. http://nachlass.prior.aau.dk. Retrieved November 14, 2016.

Priorstudies. 2016. http://www.priorstudies.org . Retrieved November 14, 2016.

PVL. 2016. http://research.prior.aau.dk. Retrieved November 14, 2016.

Roued-Cunliffe, H. 2011. A decision support system for the reading of ancient documents (Doctoral thesis). University of Oxford. https://ora.ox.ac.uk/objects/uuid:9d547661-4dea-4c54-832b-b2f862ec7b25 . Retrieved November 14, 2016.

Vindolanda Tablets. 2010. Vindolanda Tablets Online II. http://vto2.classics.ox.ac.uk. Retrieved November 14, 2016.

Recent publications by the first author, Volkmar Engerer:

Engerer, Volkmar (accepted 13 July, 2016): “Control and Syntagmatization. Vocabulary Requirements in Information Retrieval Thesauri and Natural Language Lexicons”, Journal of the Association for Information Science and Technology.

Engerer, Volkmar (im Erscheinen): „Informationswissenschaft für Linguisten. Die Sprache des Information retrieval“ (Akten der Gesus-Jahrestagung in St. Petersburg, Russland, 2015).

Engerer, Volkmar (im Erscheinen): „Das Vokabular zwischen Sprach- und Informationswissenschaft“ (Akten der Gesus-Jahrestagung in Brno, Tschechische Republik, 2016).

Engerer, Volkmar, (2016), “Exploring interdisciplinary relationships between linguistics and information retrieval from the 1960s to today”, Journal of the Association for Information Science and Technology, Article first published online April 4, 2016 (Early view). DOI: 10.1002/asi.23684.

Engerer, Volkmar, (2014), „Indexierungstheorie für Linguisten. Zu einigen natürlichsprachlichen Zügen in künstlichen Indexsprachen“, in: Schönenberger, Manuela, Volkmar Engerer, Peter Öhl & Bela Brogyanyi (Hgg.) (2014), Dialekte, Konzepte, Kontakte. Ergebnisse des Arbeitstreffens der Gesellschaft für Sprache und Sprachen, GeSuS e.V., 31. Mai – 1. Juni 2013 in Freiburg/Breisgau, Jena, pp. 61 – 74.

Engerer, Volkmar, (2014), „Thesauri, Terminologien, Lexika, Fachsprachen. Kontrolle, physische Verortung und das Prinzip der Syntagmatisierung von Vokabularen“, Information, Wissenschaft & Praxis, 65/2 (2014), pp. 99 – 108. [BFI 1]

Engerer, Volkmar, (2012), „Informationswissenschaft und Linguistik. Kurze Geschichte eines fruchtbaren interdisziplinären Verhältnisses in drei Akten“, SDV – Sprache und Datenverarbeitung. International Journal for Language Data Processing, 36/2 (2012), pp. 71 – 91 (= Hermann Cölfen (Hg.), E-Books – Fakten, Perspektiven und Szenarien)

——– LONG PAPER PRESENTATION ——–

Topics: Nordic Textual Resources and Practices

Keywords: topic modeling, mallet, swedish governmental official reports, gephi

Text Mining the History of Information Politics Through Thousands of Swedish Governmental Official Reports

Umeå University, Sweden

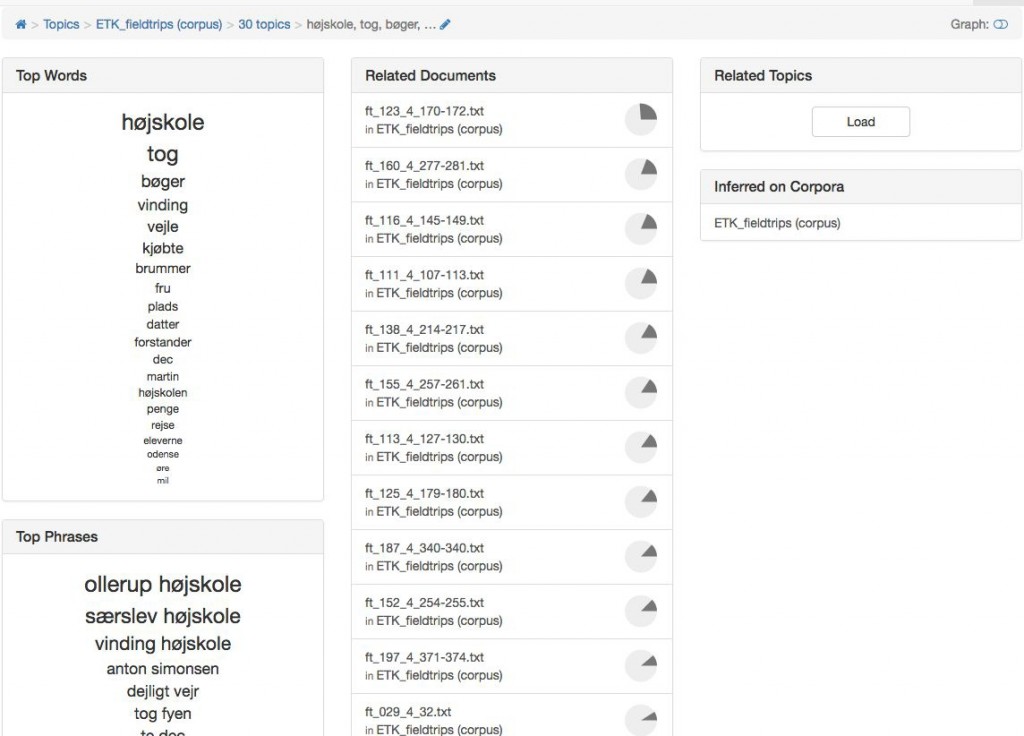

Why did “information”, a concept and a keyword that we take for granted in our modern vocabulary, infiltrate the official language in the twentieth century? In this presentation, I will show how the rise of the governmental information discourse, in the 1960s and the 1970s, can be understood within a larger theme of “development”, and how the concept of information became regarded as a silver bullet for the bureaucratic apparatus to tackle problems in society. This is done by topic modeling (with LDA/MALLET) the corpora of Swedish Governmental Official Reports (8 000 reports, 1922–), in particular by examining co-occurring topics, a less common approach within the practical use of probabilistic topic modeling. The scope and the long time-span of the report series makes it an internationally unique source, especially when it comes to study the emergence of interests and attitudes of a single state through time and, furthermore, to view it as the “voice” of the Swedish state. Today, when incalculable amounts of texts, like the report collection, not only are available online but also searchable––down to each single word––this presentation emphasizes the need for the humanities to accept the challenge of potentially re-rewrite parts of history. That is, how changes of language, in millions of documents, can be linked to—and create new understanding for—developments in society.

In collaboration with Humlab, the digital humanities hub at Umeå University, and software developer Roger Mähler, a method was developed that utilized the output data from MALLET which was expected to give insight into three things: 1) the number and diversity of reports that the information topic occurred in through time, 2) to discover and visualize larger cluster of topics and give the information topic a position, and hence a context, in those clusters, and 3) enrich the analysis by combining distant and close readings.

The Swedish Governmental Official Report series are available for public access at The Riksdag’s open data website (data.riksdagen.se), and part-of-speech tagged versions are available at Språkbanken (spraakbanken.gu.se) as downloadable XML-files. This study extracted the word stem of all nouns from the reports of the 1960s, 1970s and 1980s, which is sufficient for the study of themes in a text, and each report was split into chunks of 1 000 nouns each. MALLET was then used to compute three distinct LDA topic models, one for each decade, and each consisting of 500 topics. A manual review of the generated topics showed that each decade had been assigned a distinctive topic of a general information discourse. The computed average topic weights for each report, based on weights in each chunk, were used to visualize a network of all reports, and their most dominant topics, with weaker report-to-topic links filtered out based on a configurable threshold. Our pre-study showed that LDA topic modeling often computes a very dominant topic with a weight of over 60%, while the weight of the following topics dropped significantly. Hence, a generous threshold of 0.01 was proved to capture both a discursive core as well as its periphery.

The method showed three things. Firstly, a distinctive information discourse evolved over time in the official language in the state bureaucracy. By highlighting reports in which the information topic was dominant, it was clear that the number of co-occurring reports increased by a fourfold from the 1960s to the 1980s.

Secondly, the three datasets, one for each decade, were imported, separately, to Gephi. Each graph were modulated by the Closeness-Centrality, Force Atlas and Modularity Class algorithms to sort topics and reports into larger thematic clusters, that is meta-topics. In order to classify a meta-topic, every topic and report that belonged to a cluster were manually examined as a way to identify the common theme of the topic cluster. For example, one interesting result, which was found in the pre-study of the network graph of the 1970s, situated the information topic within a cluster that had been labeled as theme of development (of various political issues).

Thirdly, by adding close reading to the analysis, the actual reports in the cluster of “development”, presented concrete insights and illustrations of how to understand and synthesize the connection between “information” and “development” and how “information” became regarded as a universal tool for handling problems and challenges in society. Hence, the dynamic interaction between closeness and distance helped to strengthen both perspectives and enrich the end result.

——– LONG PAPER PRESENTATION ——–

Topics: Nordic Textual Resources and Practices

Keywords: teaching, learning, history, archives, skills

Teaching and learning the mindset of the digital historian and more: Scaffolding students’ critical skills in the digital humanities

Uppsala University, Sweden

Katherine Hayles (2012, p. 21) notes how “[y]oung people practice hyper reading extensively when they read on the web, but they frequently do not use it in rigorous and disciplined ways.” This is an important observation but what does this mean in practice? What does it mean to read in “rigorous and disciplined ways”, online and offline, and how can we support students habits of mind when they read and interpret sources and information from and about the past? In this paper I will present some empirical studies to highlight challenges and opportunities to support students’ success in navigating in the digital world of humanities.

Going to the sources and making sense of fragments from the past in archives, digital and analogue, demand historians to become experts in sourcing, corroborating and contextualizing the sources–and more. To better understand how experts and novices read historical documents we designed an eye-tracking study to track read differences between historians and students when they read historical documents from the time of the French revolution (Mulvey & Nygren, work in progress). We tracked eye movements of four historians, four university students, and eight high school students reading with an infrared non-intrusive eye-tracking camera. The stimuli contained four historical sources with information regarding human rights at the time of the French revolution. Sources were selected based upon their usefulness to test historical thinking (Wineburg, 1991); this means that they should be primary sources with important source information and hold valuable information for answering a complex historical question–challenging the reader to source, corroborate and contextualize the information.

The material participants in this study read included excerpts from four primary sources namely, (1) Declaration of the Rights of Man and Citizen, 26 August 1789, Paris, France; (2) The Declaration of the Rights of Woman by Marie Gouze [published under the pseudonym Olympe de Gouges], September 1791, Paris, France; (3) A particular account of the insurrection of the Negroes of St. Domingo, 1791, Paris, France; and (4) Haitian Declaration of Independence, January 1804, Gonaives, Haiti. In total there were 10 pages, 1828 words, for participants to read. The preliminary findings show that historians seem to focus more on sourcing and central historical aspects of documents, whereas high school students focus more on dramatic events and racist language. Guided by a central historical question in a directed reading, these patterns change, especially for university students, but also for high school students, who now read documents more in line with historians’ initial reading strategies. Our findings shed new light on how experts’ and novices’ historical literacy differs, and how these differences may relate to their abilities to critically scrutinize sources, corroborate evidence, and understand central aspects of historical events. Participants’ scores in the post-tests correlates to some extent with participants reading focus, indicating how a more professional focus may be scaffolded by a historical question in ways that can help students pay more attention to source information.

However, digital historians do not only closely scrutinize documents, they also use archives with large sets of data. In my talk I will also present some indications from quasi-experimental studies showing that novice users of Swedish digital archives may lose their awareness of their theoretical position and empathy when using large data sets (Nygren 2014). When facing a large set of data and statistics it may be an instinctive reaction to start sorting the information and quantifying, rather than reflecting on the starting point of your research and critical perspectives, thus conducting a more interpretive investigation. Close reading of a few documents may perhaps make it easier to hermeneutically scrutinize the information. However, using digital tools and material can also be used to closely analyze how smaller fragments fit into a bigger picture, but this takes a critical awareness of the materiality and a focus on the research question, which both students and researchers need to bear in mind when “going” to the archives.

Evidently it is possible for students to navigate digital databases and learn history in new ways using affordable technological resources. But this needs to be supported by hard and soft scaffolding (Brush & Saye, 2002). Hard scaffolds built into the databases can make databases more useful for other than just historians with expertise of the digital architecture. Soft scaffolds designed by teachers and historians can make it possible for students to use Swedish digital databases designed for professional historians (Nygren & Vikström, 2013; Nygren, Sandberg & Vikström, 2014). In previous studies we find that students can learn to walk in the shoes of the digital historian and use primary digitized sources in constructive ways, but they often stumble when it comes to contextualizing the information. A central aspect here is the challenge of historical empathy. Understanding people in the past by their own standards means that we need to contextualize the information and try to shift perspectives. There is a challenge to understand the past as a “foreign country,” a place where language and concepts as well as context differ in fundamental ways from our contemporary world (Lowenthal, 1985). This cognitive and emotional ability to understand unfamiliar perspectives across time and space, often labeled historical empathy, is a central but also complicated matter in historical studies (Davis et al 2001). Closeness and distance is vital in our understanding of the past and digital tools may help us see things in a larger perspective and also zoom in on selected parts in time and space. Closeness to primary sources and the environments studied may be a way to overcome temporal and spatial gaps of understanding. Materiality may certainly affect our construction of knowledge (Latour 1999) and researchers and students in digital history may benefit from physical reminders of the complexity of the fragmentary remains behind neat data. Mixing the digital with tangible materials may be an important scaffold to consider.

Digital tools can also be used to complement printed material, in ways that may help students and historians overcome the unreachability of the past so evident when going to the archives (Robinson 2010). Using visualizations makes it possible to organize the information in new ways, for instance linking it to geographical locations and on temporal scales. Digital tools can be used to collect different types of data and to explore relationships in time and space beyond what may be possible in more traditional explorations using pen and paper (Nygren, Frank, Bauch & Steiner, 2016). In digital history the writing of history may be more than creating text. With digital platforms, readers/users can create and present multiple interlinked narratives; integrate images, maps, commentary, and primary sources in the same field of vision; and curate and shape the reader/user’s experience, allowing for a hybrid experience (cf Thomas III & Ayers 2003). But as a final presentation, visualizations need to help the reader/user see behind the seductive cleanliness of data presentations and animations. It is also important to bear in mind how multiple narratives and multimodal presentations may confuse the reader/user rather than give a richer understanding of the topic. There may be a risk of cognitive overload for readers in hypertext environments (cf Gerjets & Sheiter 2003). All users need to learn to become critical readers and navigators in multimodal environments, and digital historians and students need to understand the audience in somewhat new ways when a digital tool becomes a publishing tool (Hayles, 2012). Students and historians need also be able to review scholarship in new media, if we want to make use of new opportunities and safeguard quality in historical scholarship and prepare students for a future in academia and beyond (Presner 2010; Nygren, Foka & Buckland 2014).

Last but not least we need to consider the uses of history in contemporary digital media. In an ongoing non-intrusive study of teachers contrasting contemporary uses of history with primary sources from the era of civil rights movement, we observe that students rarely critically scrutinize contemporary uses of the ideas of Martin Luther King Jr. when misinterpretations are underlined in the media (Nygren & Johnsrud, in review). For students, critically examining evidence seems to be difficult when authorities and media augment oversimplified and popular perceptions of the past. However, we also find that students can learn a more nuanced perspective on the life and deeds of MLK, findings still observable a year after the initial teaching took place. The results from this study show important potentials and limitations when trying to stimulate the learning of core content, critical thinking and values of social justice using primary historical sources and contemporary media representations that attempt to make the past historical and practical. Connecting the past to the present and critically scrutinizing contemporary media is asking more from students than what we ask from historians. And historians actually do not seem to be very good at critically scrutinizing online information (Wineburg & McGrew, 2016). We need to better understand this challenge and how to deal with this in schools and academia in a digital age.

Scaffolding students to read and write with the affordances offered by the digital humanities is a certainly a tall order for teachers. To make this challenge a bit less complex I suggest a focus on a few central mindsets, namely, skills to criticize, empathize and create. Having the skill to criticize means that students, and scholars, in the digital humanities need to be able to: critically examine and corroborate various types of sources (such as text, image, and audio), critically read between the lines, read close and distant, critically explore and experiment with various digital tools, understand different critical and ethical perspectives, and formulate critical questions. This critical mindset is a central part of being a rigorous reader in the digital humanities. A skillful reader is also able to empathize with multiple perspectives. This central aspect of the humanities involves classic challenges to understand: historiography, different human perspectives, not least the mind of the author, the reader, the creator and ordinary people in foreign cultures and countries. Today this means not least understanding human existence and making in analog and digital worlds. This means that students must learn to contextualize the information and empathize in cognitive and emotional ways with distant worldviews. Last but not least students in digital humanities need to create accounts to process and communicate their thoughts in nuanced ways. Writing is still a central skill. But in the digital humanities there are now opportunities to think, make, enact and experiment in a diversity of forms and in collaborations. Some ideas might certainly benefit from being treated and presented in non-textual ways. But how do we stimulate all this in practice? The answer today is that we do not really know, and we need more empirical studies to connect our theoretical understanding to the learning of students.

It is time to move beyond anecdotal evidence about how to support learning in the digital humanities. The research presented here provides some small insights into the potentials and pitfalls of teaching and learning critical mindsets useful in the digital humanities. This research highlights how it is possible for, at least some, students to learn to read like historians, navigate digital archives and deconstruct contemporary media myths about the past. But our research also highlights the complexity of teaching and learning in the digital humanities, how little we know, and how important it is to support students’ humanistic habits of mind.

References

Brush, T. A., & Saye, J. W. (2002). A summary of research exploring hard and soft scaffolding for teachers and students using a multimedia supported learning environment. The Journal of Interactive Online Learning, 1(2), 1-12.

Davis, O. L., Yeager, E. A., & Foster, S. J. (Eds.). (2001). Historical empathy and perspective taking in the social studies. Rowman & Littlefield.

Gerjets, P., & Scheiter, K. (2003). Goal configurations and processing strategies as moderators between instructional design and cognitive load: Evidence from hypertext-based instruction. Educational psychologist, 38(1), 33-41.

Hayles, N. K. (2012). How we think: Digital media and contemporary technogenesis. University of Chicago Press.

Latour, B. (1999). Pandora’s Hope: Essays on the Reality of Science Studies. Cambridge, Mass.: Harvard University Press

Lowenthal, D. (1985). The Past is a Foreign Country. Cambridge: Cambridge University Press.

Nygren, T. (2014). Students Writing History Using Traditional and Digital Archives. Human IT 12 (3): 78–116.

Nygren, T., Foka, A., & Buckland, P. I. (2014). The status quo of digital humanities in Sweden: past, present and future of digital history. H-Soz-Kult.

Nygren, T., Frank, Z., Bauch, N. & Steiner, E. (2016) Connecting with the Past: Opportunities and Challenges in Digital History, in Research Methods for Creating and Curating Data in the Digital Humanities, eds. M. Hayler & G. Griffin, Edinburgh University Press, 2016, 62–86.

Nygren, T., & Vikström, L. (2013). Treading old paths in new ways: upper secondary students using a digital tool of the professional historian. Education Sciences, 3(1), 50-73.

Nygren, T., Sandberg, K., & Vikström, L. (2014). Digitala primärkällor i historieundervisningen: en utmaning för elevers historiska tänkande och historiska empati. Nordidactica: Journal of Humanities and Social Science Education, (2), 208-245. [Digital primary sources in history education: A challenge for students’ historical thinking and historical empathy]

Presner, T. (2010). Digital Humanities 2.0: a report on knowledge. Connexions Project.—2010.

Robinson, E. (2010). Touching the Void: Affective History and the Impossible, Rethinking History: The Journal of Theory and Practice, 14:4, 503-520.

Thomas III, W. G., & Ayers, E. L. (2003). The differences slavery made: A close analysis of two American communities.

Wineburg, S. & McGrew, S. (2016) Why Students Can’t Google Their Way to the Truth, Education Week, 36(11), 22, 28

——– LONG PAPER PRESENTATION ——–

Topics: The Digital, the Humanities, and the Philosophies of Technology

Keywords: Comics, Digital aesthetics

Vectors or Bit Maps? Brief Reflection on Aesthetics of the Digital in Comics

Gothenburg University, Sweden

In recent years, researchers from various disciplines have contributed to the emerging study of the digital in comics (see Goodbrey 2013; Digital Humanities Quarterly 2015). Scholars from film and media studies, for example, have demonstrated the uses of film theory that deals with circulation (production, distribution and consumption) for thinking about digital comics and web comics (see Werschler 2011). Others have written on issues of digital mediatisation, drawing on theories of adaptation and animation (see Burke 2014). However, scholars have shown less interest in how film theory can be useful to explore the aesthetics of the digital in comics (for another study of media forms in digital humanities that utilizes film theory, see Ng 2015).

The aim of this paper is to briefly reflect on this topic, drawing on Sean Cubitt’s prolific study on the history of moving images from a digital perspective, The Cinema Effect (2004). Cubitt’s book is grounded in the concepts pixel, cut and vector. Concisely put, pixel describes the cinematic image’s appearance. Cut concerns how images are organised and differentiated through a film. Schematically transferred to the medium of comics, theses concepts may designate the visual elements of images and grids, respectively. My interest lies in the vector, which concerns the relation between the image and the interpretative mind. In computer graphics the vector is a line drawn from the centre of the screen, connecting programmed points and existing only temporarily as it leaves behind trails of light. Cubitt utilizes the analogy of the disappearing line to conceptualize thinking in the cinema as a vector-like process that links images in space and time, drawing on the viewer’s experience of the flow of images. However, writing on digital effects driven Hollywood cinema Cubitt also uses the concept of the bit map to argue that what he regards as the vector’s principle of openness has moved into something more fixed. Through the bit map he describes how cinema in the digital-era has become not only more visually spectacular but also more composed and controlled, not least since serendipity is harder to achieve on a computer, an instrument of precision (2004: 251).

Given the limited space of the paper, it is hard to address the complications of exporting theoretical concepts from one medium to another or the fundamental differences between cinema and comics. Nor will I engage with the ideological argument Cubitt develops concerning the qualities of the bit map. However, it should be noted that he presents his concepts in response to a medium with some kind of indexical relation to reality, a relation created by the cinematographic apparatus that seems to capture events. But as Cubitt himself writes, digital cinema and other digital media do not primarily refer, they communicate (2004: 250). The same could be said about comics (both pre-digital and digital) and I simply want to use Cubitt’s concepts, the vector and the bitmap, in order to tease out some brief reflections on the aesthetics of the digital in comics.

Digital Colouring and Multimedia Styles

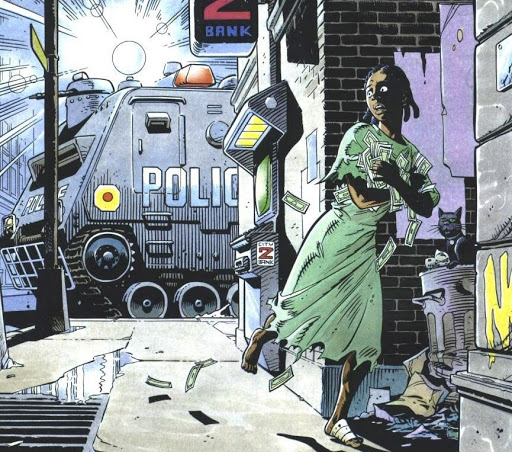

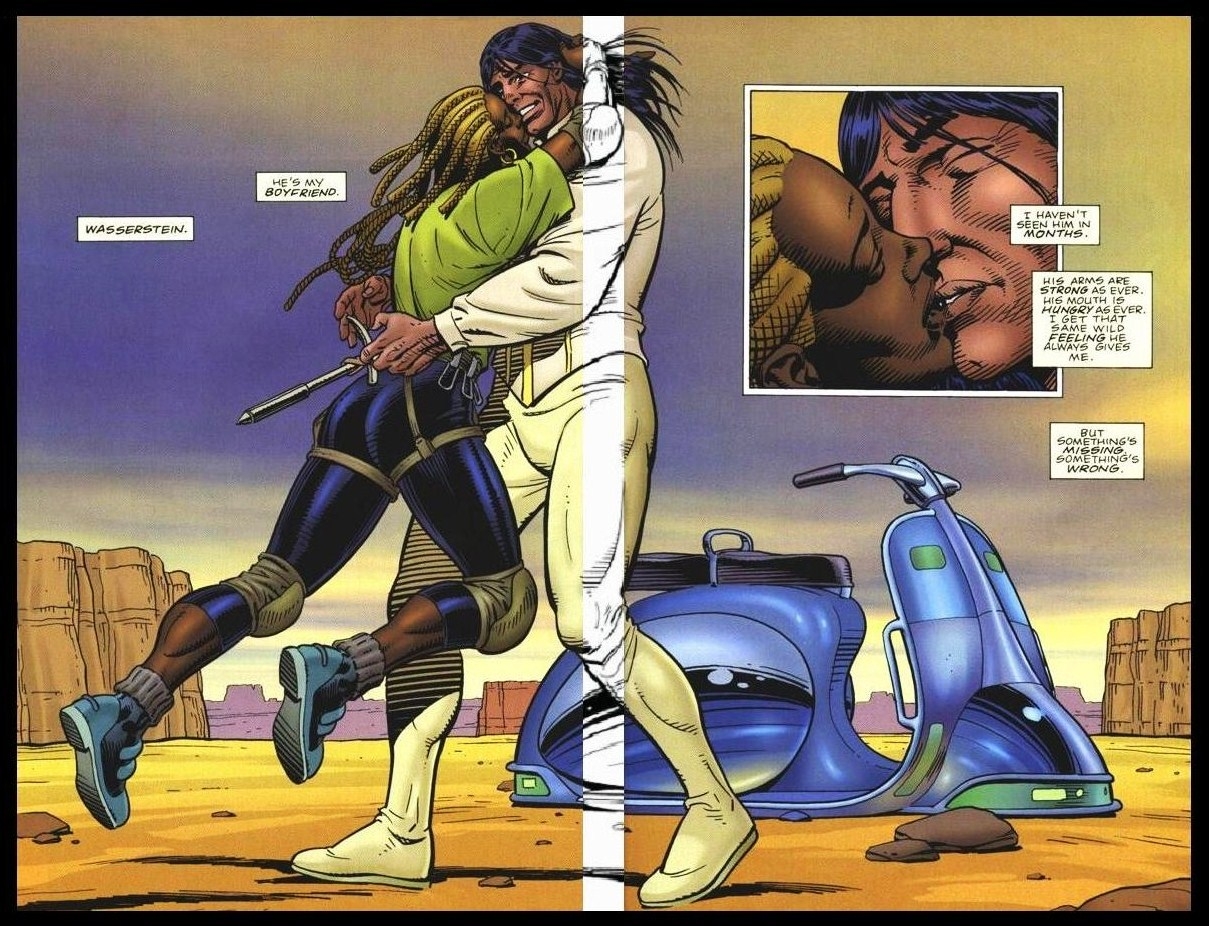

I will draw on two examples from mainstream comics. The first one concerns the breakthrough of digital colouring in the mid-1990s. In 1990 Frank Miller, the auteur behind iconic graphic novels such as Dark Knight Returns (1986), collaborated with artist Dave Gibbons of Watchmen (1986–1987, author Alan Moore) fame, on the dystopian action/satire series Give Me Liberty for Dark Horse Comics. A sequel, Martha Washington Goes to War, was published in 1994 and Gibbons’ idiosyncratic style, a combination of cartoonish and mundane realism, characterized both runs. But there were also significant differences. For example, on Martha Washington Goes to War Gibbons used a less gritty style, a choice that tied in with Miller’s more fantastical, high-concept storytelling. However, what is most interesting here is that whereas the colours in Give Me Liberty were hand-painted on watercolour paper with the then-advanced blue-line method, computer rendering was used in Martha Washington Goes to War. Simply by looking at the clean, smooth colour schemes the attentive reader could see that digital graphics was used.

Figure 1: Give Me Liberty (1990)

Figure 2: Martha Washington Goes to War (1994)

To some extent, this ties into how Cubitt associates the bit map with a more precisely composed aesthetic universe. In Martha Washington Goes to War there is even, arguably, a visible tension between Gibbons’ old-fashioned hatched line drawings and the slick, heavily graded colour palette. One can discern similar tensions in later works by Gibbons, such as the subsequent Give Me Liberty runs (1995–2007) or the high-concept spy thriller/parody The Secret Service (2012, author Mark Millar), and conspicuous uses of digital colouring have generally become a prominent element in mainstream comics.

It is worth pointing out that the aesthetics of digital colouring depends on the approach. The use of rendering, which has a certain three-dimensional feel, differs from a flat colouring approach, which tends to have more of an old-school feel. Moreover, most artists working in mainstream comics today combine digital and classic hands-on approaches. For example, on The Secret Service Gibbons used watercolour brushes and Indian ink as well as digital graphic tablets.

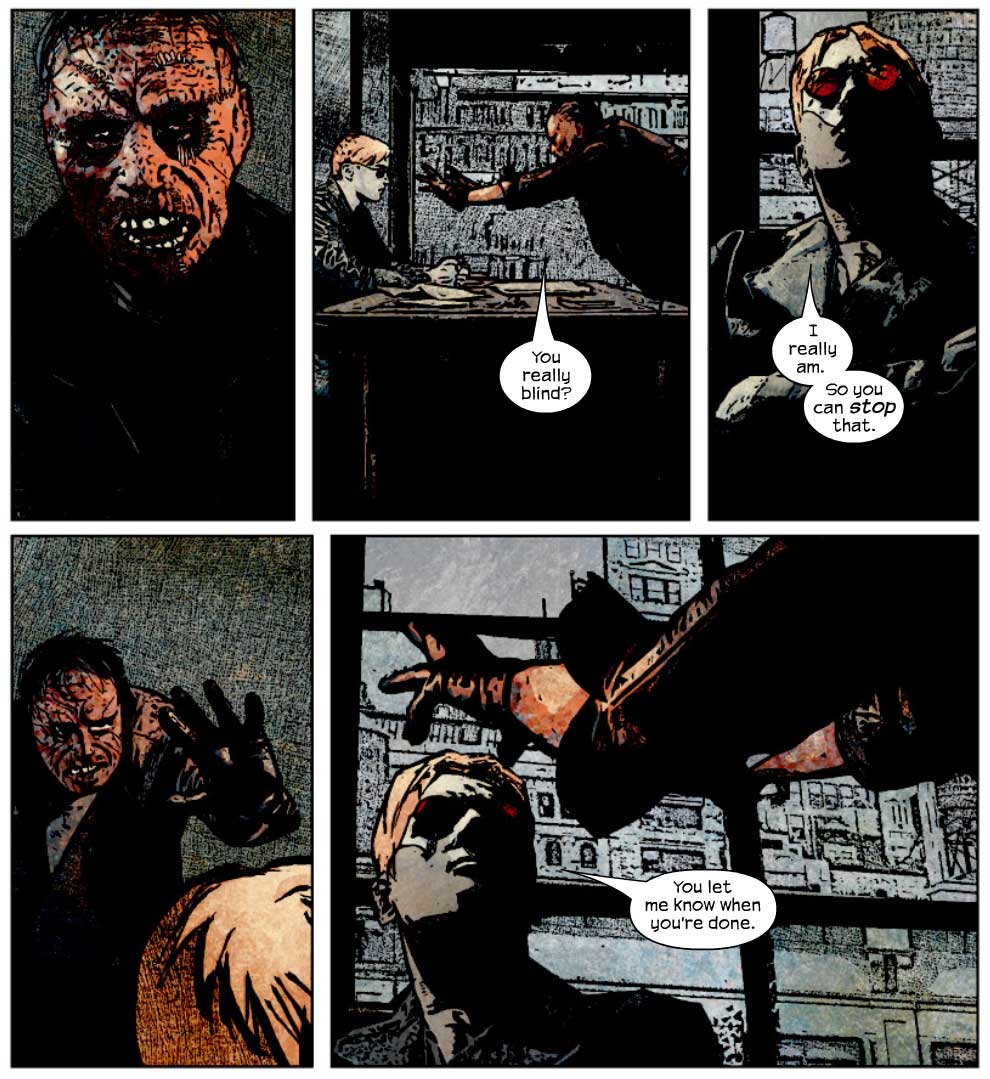

My second example concerns this kind of hybrid aesthetics; writer Brian Michael Bendis and artist Alex Maleev’s acclaimed run of Marvel Comics super-hero book Daredevil (2001–2006). Here, I should stress a point Cubitt makes; that the “distinction between bit map and vector […] so dear to first-year classes in computer graphics, is now approaching obsolescence” (2004: 249). According to Cubitt, the differences between the two principles no longer lie on “the familiar axis of verisimilitudinous painting and abstraction but along a line stretching from cartography at one end to architecture at the other. Somewhere in between lie the fields of virtual sculpture and computer-aided design manufacture” (ibid). This idea seems somewhat pertinent to Bendis and Maleev’s Daredevil run. Maleev has been described as one of a new, graphically astute breed of multimedia artists, who incorporate painting, drawing and cartooning as well as photography, collage and computer effects to expand the visual universe of their works (Schumer 2005). Combining an angular, sketchy approach and photorealism (working from photographs of models and cityscapes), he has crafted a style, which goes beyond established modes of realism in mainstream comic books, yet retaining an organic feel.

Figure 3: Daredevil no. 62 (2003)

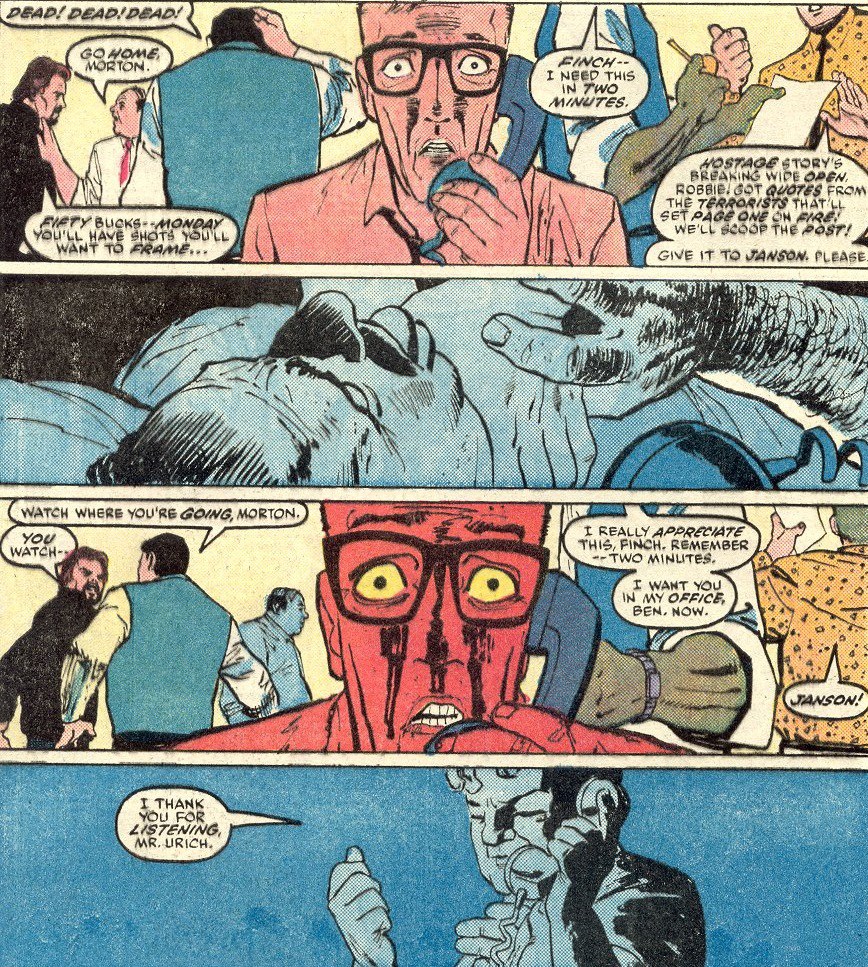

It is tempting to mainly describe Maleev’s images in terms of a higher degree of realism. Arguably, they have another quality of verisimilitude compared to, for example, the artwork of Frank Miller and David Mazzucchelli’s defining Daredevil mini-series Born Again (1986), which is also characterized by gritty and realistic but nevertheless non-photorealistic stylization.

Figure 4: Daredevil no. 229 (1986)

However, Cubitt’s claim that the difference between the bit map and the vector should not simply be described from an axis of verisimilitudinous painting and abstraction complicates matters. Though it would not be accurate to place Maleev’s images in a field equivalent to the one Cubitt associates with digital cinema (in between virtual sculpture and computer-aided design manufacture), as the analogies become a little bit “off” in the context of the comics medium, it nevertheless seems reasonable to propose that Maleev’s imagery exists in an aesthetic border zone in which Miller and Mazzucchelli’s pre-digital Daredevil mini-series do not.

Conclusion

The aesthetics of the digital has brought new visual elements to comics, such as computer rendering of colours, but also hybrid, multimedia aesthetics, which do not necessarily on the surface seem that different from the ones of yesterday. It would probably not be impossible to create images with similar qualities to those of Maleev’s without the use of computers, but arguably it would require more work and time. In this perspective, what digital technology has perhaps enabled is an economy within the comics industry with which spectacular images can be manufactured (c.f. Cubitt 2004: 248). But some differences between pre-digital and digital aesthetics in comics might also lie somewhere along a line that stretches toward absolute precision and control.

Bibliography

1. Burke, L. (2016): “Sowing the Seeds: How 1990s Marvel Animation Facilitated Today’s Cinematic Universe”, M J McEniry, R Moses Peaslee & R G Weiner (eds), Marvel Comics into Film: Essays on Adaptations Since the 1940s, London: McFarland,

2. Cubitt, S.(2004). The Cinema Effect. Cambridge, Mass: MIT Press.

3. Digital Humanities Quarterly (2015), 9:4. [Issue on digital comics]

4. Goodbrey, D. (2013). ”Digital Comics: New Tools and Tropes”. Studies In Comics, 4:1.

5. Ng, J. (2015). ”The Cut between Us: Digital Remix and the Expression of Self”. In Svensson, P. & Goldberg, D T. (eds), Between Humanities and the Digital. Cambridge, Mass: MIT Press.

6. Schumer, A. (2005). “Super-hero Artists of the Twenty-first Century: Origins”. In Dooley, M. & Heller, S. (eds), The Education of Comics Artists. New York: Allworth Press.

7. Werschler, D. (2011). “Digital Comics, Circulation, and the Importance of Being Eric Sluis”. Cinema Journal, 50:3.

——– LONG PAPER PRESENTATION ——–

Topics: Nordic Textual Resources and Practices

Keywords: newspapers, digital resources, accessibility, research use

New multi-language digitised newspapers and journals from Finland available as data exports for Nordic researchers

Tuula Pääkkönen, Jukka Kervinen

National Library of Finland, Centre for Preservation and Digitisation

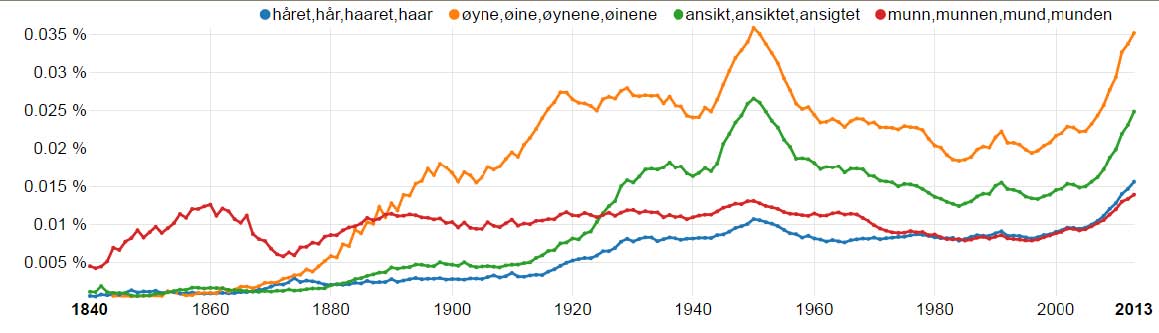

Abstract